Automated tagging, labeling, or describing of images is a crucial task in many applications, particularly in the preparation of datasets for machine learning. This is where image-to-text models come to the rescue. Among the leading image-to-text models are CLIP, BLIP, WD 1.4 (also known as WD14 or Waifu Diffusion 1.4 Tagger), and GPT-4V (Vision).

CLIP: A Revolutionary Leap

OpenAI’s Contrastive Language–Image Pretraining (CLIP) model has been widely recognized for its revolutionary approach to understanding and generating descriptions for images. CLIP leverages a large amount of internet text and image data to learn a multitude of visual concepts, thereby producing descriptive sentences for images.

However, according to user reviews, CLIP’s descriptive sentences can sometimes be redundant or overly verbose. A common criticism revolves around the model’s propensity to repeat similar descriptions for the same object or overemphasize certain attributes, such as the color of an object.

BLIP: Simplicity Meets Functionality

The BLIP model, while less detailed in its descriptions compared to CLIP, offers a simpler and more direct approach to image-to-text processing. As one reviewer noted, BLIP is “cool and all, but it’s pretty basic.” This model’s simplicity can be an advantage for applications that require straightforward, less verbose tags or descriptions.

Nevertheless, some users found that BLIP’s output often lacks the depth and granularity provided by models like WD14. While it can generate satisfactory results, BLIP may not be the best choice for applications that demand detailed, complex tags.

I’ve found WD14, despite being anime-focused, works great for actual photos of people too. I usually combine it with BLIP and most of the times it picks up much more details than BLIP.

Toni Corvera at YouTube comments

Blip is cool and all, but its pretty basic.

WD 1.4 (WD14) tagging is way better – more detail, juicier tags.

OrphBean at GitHub

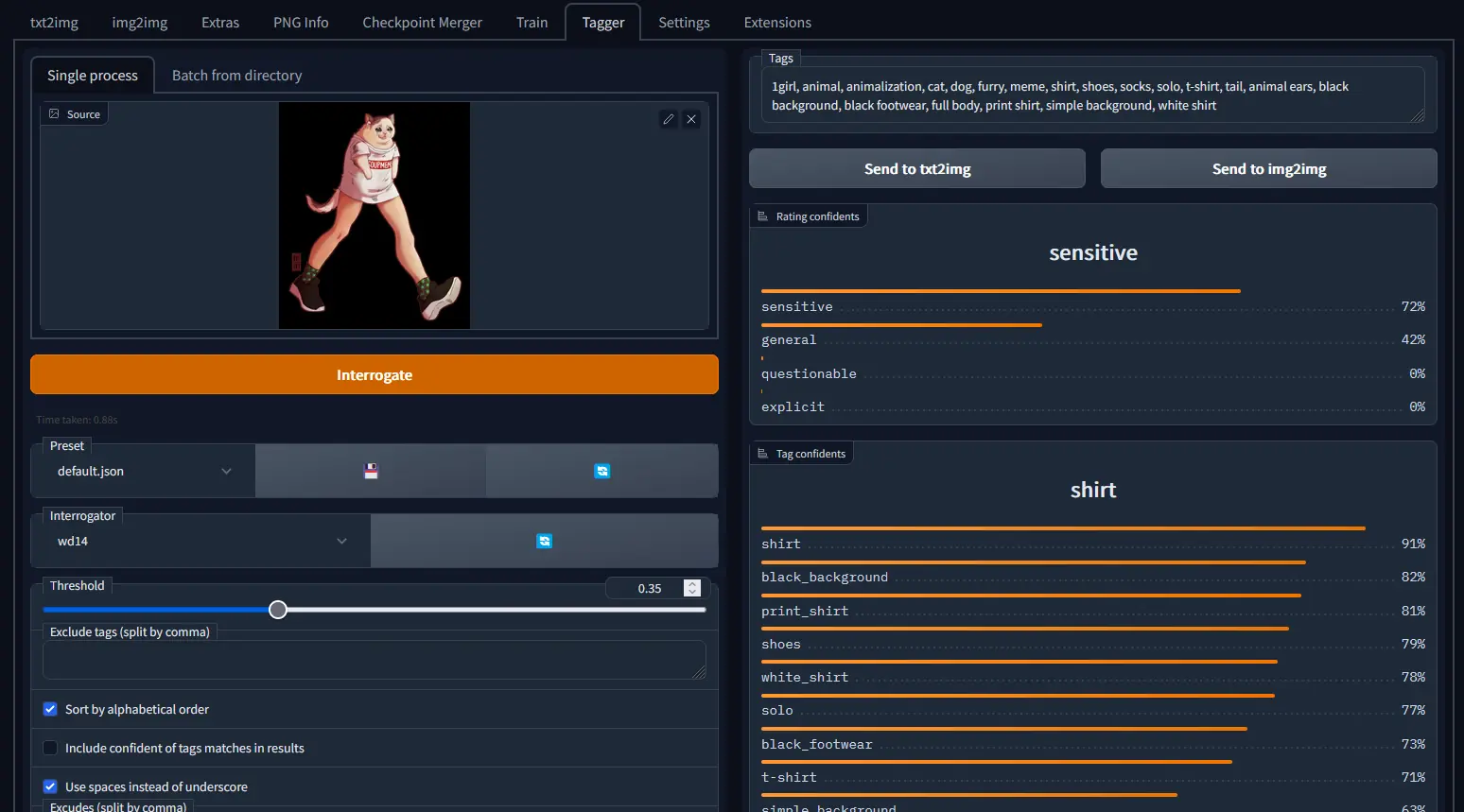

WD 1.4 (aka WD14): Precision in Detail

The WD 1.4 model (also known as WD14 or Waifu Diffusion 1.4 Tagger), initially designed for anime images, has demonstrated surprising versatility, performing well even with photos. Users have lauded its advanced configuration options and batch processing capabilities, which make it a robust tool for image-to-text translation.

What sets WD14 apart is its ability to generate detailed, “juicier” tags, providing more in-depth descriptions of images compared to its counterparts. While this model is less likely to produce spurious tags, its focus on anime might be a limitation for certain types of images.

Q: Is the WD14 tagger better than the BLIP or deepdanbooru built in Automatic1111?

A: The extension gives better options for configuration and batch processing, and I’ve found it less likely to produce completely spurious tags than deepdanbooru.CLIP/BLIP is different since those produce descriptive sentences rather than lists of tags, but the latter is usually more in line with my needs. And the built-in CLIP interrogator is prone to busting out things like “a picture of (description) and a picture of (slightly different description of the same thing” or “(mostly complete description) and pink hair and pink hair and pink hair and (repeat many times)”

Despite being made for anime, the WD14 tagger works pretty well on photos.

MorganTheDual at Reddit

GPT-4V (GPT-4 with Vision)

If you need to describe a not very large dataset of images, you can use Image Describer tool in ChatGPT. It’s not suitable for large batches of images, but it’s perfect for smaller image sets because it provides absolutely the best descriptions in natural language. Descriptions from this tool are very precise and detailed. It’s not just tags, but coherent and informative text that also takes into account the relationships between objects in the image.

Example:

Test image set:

Image Describer output for these 3 images:

You’ve uploaded three images. Here’s a description of each:

- The first image features an adorable, stylized cartoon kitten with large, expressive eyes. It is predominantly orange with white accents on its face, chest, and paws. The kitten is holding a pen, which is almost as big as itself, and is drawing a happy face on a bright yellow ball. The art style is very polished and has a kind of glossy finish. The background suggests a whimsical setting with colorful, abstract shapes and a suggestion of a starry sky.

- The second image appears to be a screenshot of a chat or comment section from a social media platform. Various users have left comments, many of which include laughter or amusement indicated by acronyms like “LMAO” and emojis. The usernames and profile pictures are pixelated for privacy, with a variety of colors displayed in the pixelation.

- The third image is a highly detailed and realistic illustration of a young woman with a friendly expression. She has long black hair, fair skin, and wears a purple hoodie. The woman is also wearing a headset, suggesting she might be a video game streamer or involved in some form of online communication. The setting appears to be a room illuminated with a purple hue, possibly from LED lights, indicative of a gaming setup.

Each image has a very different context and visual style, ranging from whimsical illustration to a screenshot of a digital interaction, to a more realistic digital painting.

Conclusion

Choosing between CLIP, BLIP, WD 1.4, and Image Describer depends largely on the specific needs of a project. If precision and detail are paramount, WD 1.4 offers a compelling option with its advanced configuration and detailed tagging capabilities. For simpler applications, BLIP’s straightforward approach might be more suitable. Meanwhile, CLIP provides a balance between detail and simplicity, albeit with a tendency for verbosity.

Image Describer provides the best results but is not suitable for describing or tagging large sets of images.

As these models continue to evolve and improve, they hold promising potential for a broad spectrum of applications, from content creation to data analysis. Despite their differences, CLIP, BLIP, WD 1.4, and GPT-Vision models are testament to the rapid advancements in image-to-text technology, each contributing unique strengths to this exciting field.