Santa Clara, CA – July 20, 2023 – Cerebras Systems, a leading provider of wafer-scale AI solutions, has announced a major deal with G42, an Abu Dhabi-based technology conglomerate. Cerebras will be building what is poised to become the world’s largest AI supercomputer for G42 over the next 18 months.

The Condor Galaxy: A Massive AI System

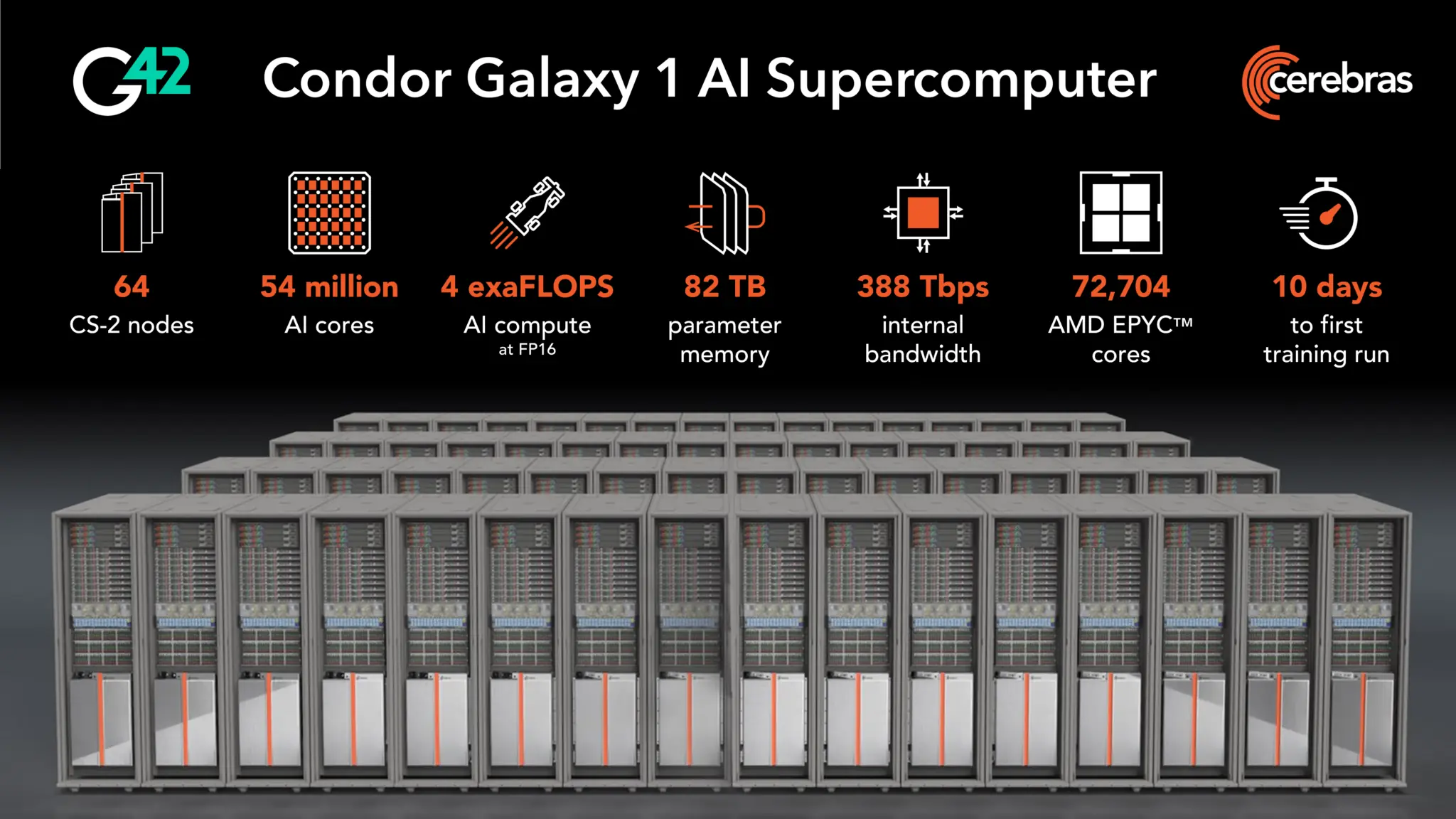

The new AI supercomputer will consist of multiple ‘Condor Galaxy’ systems, each made up of 64 of Cerebras’s revolutionary wafer-scale chips, the Cerebras Wafer Scale Engine 2 (WSE2).

The first Condor Galaxy system is already operational at Cerebras’s facility in Santa Clara. With 32 WSE2 chips currently installed, it provides 2 exaflops of AI compute power at FP16. By next month, it will double to 64 chips and 4 exaflops.
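As a quick sanity check on those figures, the sketch below derives the per-chip throughput implied by 2 exaflops on 32 chips. It assumes, as a simplification not stated by Cerebras, that FP16 throughput scales linearly with chip count.

```python
# Figures quoted for the first Condor Galaxy system (FP16).
CHIPS_NOW = 32
EXAFLOPS_NOW = 2.0
CHIPS_FULL = 64

# Assumption: throughput scales linearly with the number of WSE2 chips.
PFLOPS_PER_EXAFLOP = 1000
per_chip_pflops = EXAFLOPS_NOW * PFLOPS_PER_EXAFLOP / CHIPS_NOW
full_exaflops = per_chip_pflops * CHIPS_FULL / PFLOPS_PER_EXAFLOP

print(f"Implied per-chip throughput: {per_chip_pflops} PFLOPS")
print(f"Projected at {CHIPS_FULL} chips: {full_exaflops} EFLOPS")
```

The implied 62.5 petaflops per chip is consistent with the 4 exaflops quoted for the full 64-chip system.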

Two more 4-exaflop Condor Galaxy systems will come online in Austin, TX and Asheville, NC by June 2024. The three systems will be networked together into one massive AI machine.

Over 36 Exaflops by End of 2024

But the scope of the project goes far beyond just those initial 3 systems. Cerebras has agreements in place with G42 to build an additional 6 Condor Galaxy clusters, bringing the total to 9 systems in the next 18 months.

With each providing 4 exaflops, the overall supercomputer will reach 36 exaflops of FP16 AI compute by the end of 2024, which would make it the largest AI system in the world.
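The headline figure is simple multiplication. The sketch below also totals the chip count implied by the article's 64-chips-per-system figure, a number the article does not state directly. Both totals are peak figures and ignore any overhead from linking systems across sites.

```python
# Article figures: 9 Condor Galaxy systems, each with 64 WSE2 chips and 4 FP16 exaflops.
SYSTEMS = 9
CHIPS_PER_SYSTEM = 64
EXAFLOPS_PER_SYSTEM = 4

total_chips = SYSTEMS * CHIPS_PER_SYSTEM
total_exaflops = SYSTEMS * EXAFLOPS_PER_SYSTEM

print(f"{total_chips} WSE2 chips, {total_exaflops} FP16 exaflops")
# prints "576 WSE2 chips, 36 FP16 exaflops"
```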

The additional 6 systems will be located outside of the United States, with exact locations still to be determined.

Revolutionizing AI with Wafer-Scale Computing

The massive scale of this project serves to validate Cerebras’s wafer-scale approach. Each WSE2 chip is built from a single 300mm wafer and packs 850,000 AI cores onto 46,225 mm² (about 462 cm²) of silicon.

By providing extreme density and tight integration of cores, memory, and fabric, wafer-scale technology avoids much of the communication overhead of scaling AI workloads across many GPUs or standard CPUs. WSE2 allows even the largest AI models to run on one or a few chips.

The giant AI supercomputer being built for G42 will become a proving ground for wafer-scale computing in large-scale production deployments of real-world AI applications.

Targeting Healthcare with M42

The supercomputer will be used to power G42’s ‘M42’ healthcare data and analytics platform, which aims to derive insights from vast amounts of patient health data coming from the UAE’s healthcare system. By training AI models on comprehensive medical datasets, G42 hopes to make predictive healthcare a reality.

With 9 Condor Galaxy clusters providing a total of 36 exaflops, the upcoming G42 system will be a groundbreaking AI achievement. For Cerebras Systems, the project is a strong endorsement of its wafer-scale approach as the future of AI computing. The deal with G42 shows that wafer-scale systems are ready for large-scale commercial deployment and can change how the most demanding AI applications are run.

This is incredibly impressive, but I do wonder about the logistics of networking systems spread across multiple states. Very curious to see how they handle that.

Oh neat, using this for healthcare data analytics makes a lot of sense. Still confused though – what exactly can you do with a supercomputer of this size that you couldn’t with a normal system?

This is quite interesting, but I do wonder about the implications of such powerful AI systems being developed privately. What kinds of accountability and oversight measures are in place?

Wow, this is so cool! I can’t believe they’re building such a massive AI supercomputer. 36 exaflops?! That’s just mind blowing. I’d love to see this thing in action once it’s built!

Holy moly, 36 exaflops?! How in the world does that even work? This sounds like something straight out of a sci-fi movie. Are these wafer-scale chips really that powerful? I gotta learn more!

Wowee, talk about future tech! Exaflops and wafer-scale computing and AI models – it’s all so fascinating but way over my head. I wish I understood this high-tech stuff better…

But can it run Crysis tho?

– The supercomputer’s 36 exaflops are FP16 performance. That is very high AI performance, but supercomputer exaflop figures are usually quoted at FP64, so it’s hard to compare directly with traditional supercomputers: 36 exaflops of FP16 performance ≠ 36 exaflops of FP64 performance.

– The cores run at low voltage (0.7V) and power (30mW per core), enabling the high core count.

– Cerebras chips are designed to bypass defective cores, so manufacturing defects barely affect yield.

– The customer, G42, is based in the UAE and has ties to GlobalFoundries, a chip foundry.

– Chip shape affects how many cores fit on the wafer, but Cerebras uses a standard square die for manufacturing ease.
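The FP16 versus FP64 point in the first bullet can be made concrete. IEEE 754 half precision (FP16) stores 10 fraction bits and tops out at 65504, while double precision (FP64) stores 52 fraction bits. The sketch below uses Python's standard `struct` module, whose `"e"` format code packs a float as IEEE half precision, to round a few FP64 values to FP16. It only illustrates the precision gap and says nothing about how Cerebras hardware computes internally.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float (FP64) to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# 65504.0 is the largest finite FP16 value; larger inputs raise OverflowError.
for value in (0.1, 2049.0, 65504.0):
    print(value, "->", to_fp16(value))
```

Here 0.1 comes back as 0.0999755859375 and 2049.0 cannot be represented exactly, which is why an FP16 exaflop and an FP64 exaflop are not interchangeable.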
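Taking the bullet's 30 mW per core at face value (a figure from the comment, not the article, and not verified here), the core array's total draw is a one-line calculation. This ignores memory, I/O, power conversion, and cooling, and assumes every core draws its full 30 mW at once.

```python
CORES = 850_000            # WSE2 core count from the article
MILLIWATTS_PER_CORE = 30   # per-core figure from the comment above (unverified)

core_power_kw = CORES * MILLIWATTS_PER_CORE / 1_000_000  # mW -> kW
print(f"Cores alone: about {core_power_kw} kW per WSE2")
```

Roughly 25 kW per wafer for the cores alone suggests why very low per-core power is a precondition for such a high core count.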
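The idea of bypassing defective cores can be illustrated with a deliberately simplified model. The sketch assumes, hypothetically, a single row of cores with a few spares, independent defects, and a 1% per-core defect rate chosen only to make the effect visible. It is not Cerebras's actual redundancy scheme, whose details are not described here; `map_logical_to_physical` and `row_yield` are illustrative names.

```python
from __future__ import annotations

from math import comb


def map_logical_to_physical(defective: set[int], physical: int, logical: int) -> list[int] | None:
    """Assign logical cores, in order, to working physical cores, skipping defective ones.

    Returns None when there are fewer working cores than logical cores needed.
    """
    working = [i for i in range(physical) if i not in defective]
    if len(working) < logical:
        return None
    return working[:logical]


def row_yield(physical: int, logical: int, p_defect: float) -> float:
    """Probability that a row of `physical` cores, each independently defective
    with probability `p_defect`, still has at least `logical` working cores."""
    spares = physical - logical
    return sum(
        comb(physical, k) * p_defect**k * (1 - p_defect) ** (physical - k)
        for k in range(spares + 1)
    )


print(map_logical_to_physical({1, 3}, physical=6, logical=4))  # [0, 2, 4, 5]
print(f"no spares: {row_yield(100, 100, 0.01):.3f}")          # 0.366
print(f"one spare: {row_yield(101, 100, 0.01):.3f}")          # 0.732
```

In this toy model a single spare core doubles the chance that the row is usable, which is the intuition behind designing in redundancy rather than requiring a defect-free wafer.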

Why don’t they make the chip circular to better fit the wafer and increase core count?
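A rough way to size this question: if the die is treated as the largest square that fits inside a 300mm wafer (a simplifying assumption; the real die is not exactly this size), the square covers 2/π, about 64%, of the wafer. The naive estimate below scales the article's 850,000 cores by the extra area at the same core density. It ignores edge losses and the rectangular reticle fields that lithography exposes, both of which favor rectangular layouts in practice.

```python
import math

WAFER_DIAMETER_MM = 300
WSE2_CORES = 850_000   # core count from the article

wafer_area = math.pi * (WAFER_DIAMETER_MM / 2) ** 2
# Largest square inscribed in a circle: side = diameter / sqrt(2), area = diameter**2 / 2.
square_area = WAFER_DIAMETER_MM ** 2 / 2
square_fraction = square_area / wafer_area   # equals 2 / pi

# Naive upper bound: same core density spread over the whole circular wafer.
circular_cores = WSE2_CORES * wafer_area / square_area

print(f"Square die uses {square_fraction:.1%} of the wafer")
print(f"Naive circular-die core count: {circular_cores:,.0f}")
```

In this idealized model a circular die would hold roughly 1.3 million cores, about 57% more, which is the gain the square shape gives up for simpler manufacturing.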