Today, we will discuss a groundbreaking paper in which researchers used Stable Diffusion, a generative AI model, to reconstruct images from human brain activity. The research has significant implications for neuroscience and opens the door to a wide range of applications, from reading dreams to understanding how animals perceive the world.

Stable Diffusion and Decoding Brain Activity

Stable Diffusion is an open-source generative AI model that creates detailed images from text prompts. In the study, human participants viewed thousands of images while their brain activity was recorded with functional magnetic resonance imaging (fMRI). Rather than retraining or fine-tuning Stable Diffusion, the researchers fit simple linear models that learn the relationship between these brain activity patterns and the model's internal representations of the corresponding images. Feeding the representations decoded from brain activity into Stable Diffusion then allowed the system to reconstruct the images the participants had seen.
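The core decoding step can be illustrated with a toy example. The sketch below fits a ridge (L2-regularized linear) regression, one standard choice of simple linear model, that maps synthetic "voxel" activity to a small latent vector. Everything here is an assumption for illustration: the data is simulated, the sizes are tiny, the regularization strength `lam` is arbitrary, and the helper names (`fit_ridge`, `simulate`, and so on) are hypothetical. It does not load real fMRI data or call Stable Diffusion.

```python
import random


def transpose(m):
    """Return the transpose of a list-of-lists matrix."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Multiply two list-of-lists matrices."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    # Augment a copy of `a` with the right-hand-side columns of `b`.
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_ridge(x, y, lam):
    """Closed-form ridge regression: W = (X^T X + lam * I)^-1 X^T Y."""
    xt = transpose(x)
    gram = matmul(xt, x)
    for i in range(len(gram)):
        gram[i][i] += lam
    return solve(gram, matmul(xt, y))


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sum((x - ma) ** 2 for x in a) ** 0.5
    sb = sum((y - mb) ** 2 for y in b) ** 0.5
    return cov / (sa * sb)


# Toy sizes: real fMRI data has thousands of voxels and Stable Diffusion's
# latents are far larger; these numbers are illustrative assumptions.
N_TRAIN, N_TEST, N_VOXELS, LATENT_DIM = 300, 50, 20, 4
rng = random.Random(0)
# Hypothetical ground-truth linear link between voxel activity and latents.
w_true = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(N_VOXELS)]


def simulate(n):
    """Generate n synthetic (voxel pattern, image latent) pairs with noise."""
    x = [[rng.gauss(0, 1) for _ in range(N_VOXELS)] for _ in range(n)]
    y = [[v + rng.gauss(0, 0.5) for v in row] for row in matmul(x, w_true)]
    return x, y


x_train, y_train = simulate(N_TRAIN)
x_test, y_test = simulate(N_TEST)
w = fit_ridge(x_train, y_train, lam=1.0)
y_pred = matmul(x_test, w)  # decode latents for unseen "scans"

# Score each latent dimension by how well decoded values track the truth.
corrs = [pearson([r[d] for r in y_pred], [r[d] for r in y_test])
         for d in range(LATENT_DIM)]
print([round(c, 3) for c in corrs])
```

In a full pipeline, the decoded latents would then be handed to the diffusion model to render an image; the correlations printed here only check that the linear mapping recovers the simulated latents on held-out trials. Pure Python keeps the sketch dependency-free; a practical version would use a numerical library.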

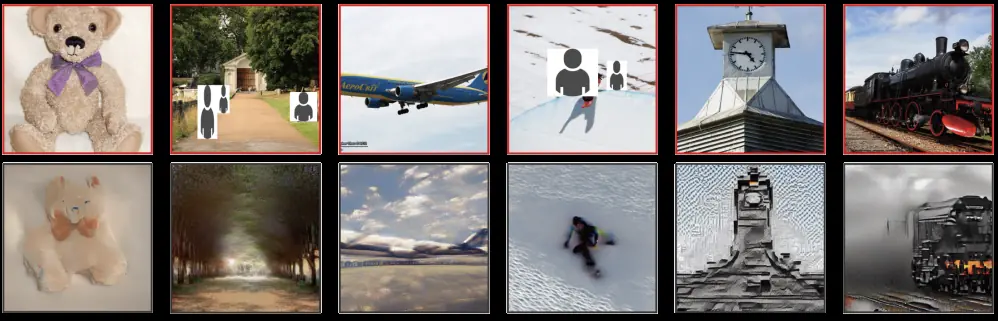

The reconstructions were not always perfect, but they frequently matched the original images closely, often reproducing the position and scale of objects. The most common discrepancy was the color of certain elements. The method's success comes from combining recent advances in neuroscience with latent diffusion models, which generate images in a compressed latent space rather than directly in pixel space.
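Statements such as "frequently produced accurate reconstructions" are usually backed by a quantitative score. One common choice in the brain-decoding literature is two-way identification: a reconstruction counts as correct against a distractor if it resembles its own original image more than the distractor image. The sketch below assumes Pearson correlation between hypothetical feature vectors as the similarity measure and uses synthetic data throughout; it is an illustration of the idea, not the paper's exact evaluation protocol.

```python
import random


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sum((x - ma) ** 2 for x in a) ** 0.5
    sb = sum((y - mb) ** 2 for y in b) ** 0.5
    return cov / (sa * sb)


def two_way_identification(recon_feats, true_feats):
    """Fraction of (i, j) pairs, i != j, where reconstruction i correlates
    more strongly with its own original image i than with image j.
    Chance level is 0.5."""
    n = len(true_feats)
    wins = trials = 0
    for i in range(n):
        own = pearson(recon_feats[i], true_feats[i])
        for j in range(n):
            if j == i:
                continue
            trials += 1
            if own > pearson(recon_feats[i], true_feats[j]):
                wins += 1
    return wins / trials


rng = random.Random(1)
N_IMAGES, FEAT_DIM = 30, 50  # illustrative sizes, not from the paper
# Hypothetical feature vectors describing each original image.
true_feats = [[rng.gauss(0, 1) for _ in range(FEAT_DIM)] for _ in range(N_IMAGES)]
# "Good" reconstructions: the original features plus moderate noise.
good = [[v + rng.gauss(0, 0.5) for v in f] for f in true_feats]
# Baseline: reconstructions unrelated to the originals.
unrelated = [[rng.gauss(0, 1) for _ in range(FEAT_DIM)] for _ in range(N_IMAGES)]

good_acc = two_way_identification(good, true_feats)
chance_acc = two_way_identification(unrelated, true_feats)
print(f"good: {good_acc:.2f}, unrelated: {chance_acc:.2f}")
```

Unrelated reconstructions should score near the 0.5 chance level, while reconstructions that preserve the originals' features should score close to 1.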

Potential Applications and Future Challenges

There are numerous potential applications for this technology, including:

- Reading dreams, thoughts, and memories

- Understanding how animals perceive the world based on their brain activity

- Creating artificial systems that can comprehend the world like humans

One key step toward improving the algorithm's accuracy is training it on larger datasets of brain scans. As the technology advances, it is likely to drive a major revolution in human-machine interfaces.

Brain-Computer Interfaces: The Next Hardware Interface

Several startups are already developing devices that translate thoughts into text messages or let users control virtual environments with their minds. Companies such as NextMind and Microsoft are actively working on non-invasive brain-computer interfaces (BCIs), betting that controlling devices with thought will be the next major hardware interface.

This shift in human-machine interaction will have significant implications for how we communicate, work, and create art. Non-invasive BCIs offer a safer and more practical alternative to invasive BCIs, which can read brain signals with greater precision but require surgery to implant electrodes through the skull.

Video by Anastasi In Tech

Research paper:

https://www.biorxiv.org/content/10.1101/2022.11.18.517004v3.full.pdf

Conclusion

As neuroscience and AI continue to develop, the ability to read our minds no longer seems out of reach. With non-invasive BCIs on the horizon, we are on the cusp of a revolution in human-machine interfaces, transforming how we interact with our devices and the world around us.