The realm of 3D graphics has witnessed a remarkable evolution, particularly in the techniques used to render complex scenes. This article examines four technologies that have shaped the landscape: NeRF (Neural Radiance Fields), ADOP (Approximate Differentiable One-Pixel Point Rendering), Gaussian Splatting, and TRIPS (Trilinear Point Splatting for Real-Time Radiance Field Rendering). Each represents a leap forward in our quest to create ever more realistic virtual worlds.

Neural Radiance Fields (NeRF)

NeRF emerged as a groundbreaking approach for turning a collection of 2D images with known camera poses into a navigable 3D scene. It trains a neural network (a multilayer perceptron) that maps a 3D position and viewing direction to a volume density and a color. New views are rendered by sampling this field along camera rays and compositing the samples with classical volume rendering, which produces images from unseen viewpoints with striking detail and photorealism. The technology has found applications across various domains, from virtual reality to autonomous navigation.
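
The compositing step can be sketched for a single ray. Each sample's weight is its opacity, 1 - exp(-density * step), multiplied by the transmittance (the fraction of light not yet absorbed in front of it), which is the standard NeRF quadrature. This is a minimal sketch, assuming a hypothetical `field` function in place of the trained network (here a hand-written slab of red fog) and evenly spaced midpoint samples rather than the stratified and hierarchical sampling used in practice.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical stand-in for the trained network: (point, direction) -> (density, rgb)
Field = Callable[[Vec3, Vec3], Tuple[float, Vec3]]


def render_ray(field: Field, origin: Vec3, direction: Vec3,
               near: float, far: float, n_samples: int) -> Tuple[Vec3, float]:
    """Alpha-composite evenly spaced samples along a ray; returns (color, leftover transmittance)."""
    delta = (far - near) / n_samples
    transmittance = 1.0  # fraction of light that reaches the current sample unoccluded
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # midpoint of the i-th segment
        point = (origin[0] + t * direction[0],
                 origin[1] + t * direction[1],
                 origin[2] + t * direction[2])
        sigma, rgb = field(point, direction)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha  # light surviving past this segment
    return (color[0], color[1], color[2]), transmittance


def red_fog_slab(point: Vec3, direction: Vec3) -> Tuple[float, Vec3]:
    """Toy field (hypothetical): density 2.0 and pure red for 1 <= z <= 2, empty elsewhere."""
    inside = 1.0 <= point[2] <= 2.0
    return (2.0 if inside else 0.0), (1.0, 0.0, 0.0)
```

Calling `render_ray(red_fog_slab, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.0, 4.0, 400)` returns a red channel of about 1 - exp(-2), roughly 0.865. Because every weight is a smooth function of the densities and colors, the same computation lets training gradients flow back into the network.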

Instant NeRF: The Next Step

A notable advancement in NeRF technology is Instant NeRF. Developed by NVIDIA, it significantly speeds up the process, training on a few dozen photos in seconds and rendering the 3D scene in milliseconds. This rapid rendering capability opens up new possibilities for real-time applications and could revolutionize 3D content creation.

ADOP: Approximate Differentiable One-Pixel Point Rendering

ADOP, which stands for Approximate Differentiable One-Pixel Point Rendering, is a point-based, differentiable neural rendering pipeline introduced by Darius Rückert, Linus Franke, and Marc Stamminger. This system is designed to take calibrated camera images and a proxy geometry of the scene, typically a point cloud, as input. The point cloud is then rasterized with learned feature vectors as colors, and a deep neural network is employed to fill in the gaps and shade each output pixel.

The rasterizer in ADOP renders points as one-pixel splats, which is not only very fast but also allows for efficient computation of gradients with respect to all relevant input parameters. This makes it particularly suitable for applications that require real-time rendering rates, even for models with well over 100 million points.
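
A CPU sketch of one-pixel point rasterization follows. It assumes points are already in camera coordinates, a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and a hard nearest-depth test; ADOP's actual GPU rasterizer and its approximate gradient computation differ in detail. Pixels left as `None` are the holes that ADOP's neural network has to fill in.

```python
import math
from typing import Any, List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def rasterize_one_pixel(points: Sequence[Point], features: Sequence[Any],
                        fx: float, fy: float, cx: float, cy: float,
                        width: int, height: int):
    """Write each point's feature into the single pixel it projects to, keeping the nearest point."""
    depth: List[List[float]] = [[math.inf] * width for _ in range(height)]
    image: List[List[Optional[Any]]] = [[None] * width for _ in range(height)]
    for (x, y, z), feature in zip(points, features):
        if z <= 0.0:
            continue  # behind the camera
        # Pinhole projection rounded to the nearest pixel: a one-pixel splat
        u = math.floor(fx * x / z + cx + 0.5)
        v = math.floor(fy * y / z + cy + 0.5)
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z
            image[v][u] = feature
    return image, depth
```

Because each point touches exactly one pixel, the cost grows linearly with the number of points, which helps explain why this style of rasterizer can keep up with very large point clouds.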

Moreover, ADOP includes a fully differentiable physically-based photometric camera model, which encompasses exposure, white balance, and a camera response function. By following the principles of inverse rendering, ADOP refines its input to minimize inconsistencies and optimize the quality of its output. This includes optimizing structural parameters like camera pose, lens distortions, point positions, and features, as well as photometric parameters such as camera response function, vignetting, and per-image exposure and white balance.
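
The photometric part of such a camera model can be read as a chain of simple per-pixel operations. The sketch below uses a hypothetical parameterization: exposure as a power of two of an exposure value, per-channel white-balance gains, an even polynomial in the normalized radius for vignetting, and a fixed gamma curve standing in for ADOP's learned response function. It illustrates the idea rather than reproducing ADOP's exact formulation. Since every step is differentiable, an image loss can send gradients back to each of these parameters.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def apply_camera_model(radiance: RGB, exposure_ev: float, white_balance: RGB,
                       vignette_coeffs: Sequence[float], r: float,
                       gamma: float = 2.2) -> RGB:
    """Map scene radiance at one pixel to an image value (hypothetical parameterization)."""
    exposure = 2.0 ** (-exposure_ev)  # one EV step halves or doubles the light
    r2 = r * r  # r: distance from the image center, normalized to [0, 1]
    k1, k2, k3 = vignette_coeffs
    vignette = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3  # radial falloff
    out = []
    for value, gain in zip(radiance, white_balance):
        x = value * exposure * gain * vignette
        x = min(max(x, 0.0), 1.0)  # clip to the sensor's range
        out.append(x ** (1.0 / gamma))  # fixed curve standing in for a learned response function
    return (out[0], out[1], out[2])
```

In an inverse-rendering setup, `exposure_ev` and `white_balance` would be optimized per image, while the vignetting coefficients and response curve would be shared by all images from the same camera.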

Because it handles input images with varying exposure and white balance and can produce high-dynamic-range output, ADOP represents a significant advancement in the field of neural rendering. If you’re interested in computer graphics, especially alternatives to Gaussian splatting, ADOP’s approach to point rasterization and scene refinement could be quite relevant to your work or research.

Gaussian Splatting

Gaussian Splatting has its roots in a tried-and-tested traditional technique for volume rendering and point-based graphics. Each data sample is represented by a Gaussian kernel whose footprint is projected onto the 2D image plane and blended with its neighbors, which creates smooth transitions and renders volumetric data such as medical scans with impressive clarity.
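
Classic splatting and its modern 3D variant end with the same per-pixel step: each projected Gaussian contributes an opacity that falls off with distance from its center, and the contributions are blended front to back. The sketch below shows only that step, assuming the Gaussians have already been projected to screen space and using a hypothetical `Splat2D` record; the opacity clamp and early-termination threshold are illustrative choices.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Splat2D:
    mean: Tuple[float, float]         # projected center in pixels
    cov: Tuple[float, float, float]   # 2x2 covariance [[a, b], [b, c]] stored as (a, b, c)
    opacity: float                    # peak opacity in [0, 1]
    color: Tuple[float, float, float]
    depth: float                      # distance from the camera, used only for sorting


def gaussian_weight(s: Splat2D, px: float, py: float) -> float:
    """Evaluate the unnormalized 2D Gaussian footprint at pixel (px, py)."""
    a, b, c = s.cov
    det = a * c - b * b
    dx, dy = px - s.mean[0], py - s.mean[1]
    # Squared Mahalanobis distance using the inverse of [[a, b], [b, c]]
    m = (c * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * m)


def shade_pixel(splats: List[Splat2D], px: float, py: float) -> Tuple[float, float, float]:
    """Blend the splats covering a pixel from front to back."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for s in sorted(splats, key=lambda sp: sp.depth):  # nearest first
        alpha = min(s.opacity * gaussian_weight(s, px, py), 0.99)  # keep transmittance positive
        for ch in range(3):
            color[ch] += transmittance * alpha * s.color[ch]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # the pixel is effectively opaque; later splats are hidden
    return (color[0], color[1], color[2])
```

Sorting by depth is what makes the result order-dependent in the same way as the volume-rendering sum used by NeRF, which is why the two families produce comparable images.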

Recent Developments

Recent advancements have introduced 3D Gaussian Splatting (3DGS), which represents a scene explicitly as a set of optimizable 3D Gaussians and renders it at real-time frame rates. This explicit representation facilitates dynamic reconstruction and editing tasks, pushing the boundaries of what can be achieved with traditional splatting methods.
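
In 3DGS each Gaussian is stored explicitly: a 3D position, an opacity, view-dependent color (spherical harmonics coefficients), and an anisotropic covariance. Rather than optimizing the covariance matrix directly, 3DGS builds it from a rotation (a unit quaternion) and per-axis scales as Σ = R S Sᵀ Rᵀ, which keeps it a valid covariance throughout optimization. A minimal sketch of that construction in plain Python, with hypothetical function names:

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def quat_to_rotation(w: float, x: float, y: float, z: float) -> Matrix:
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize so the result is a proper rotation
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance_from_params(quat: Sequence[float], scale: Sequence[float]) -> Matrix:
    """Build Sigma = R S S^T R^T from a rotation quaternion and per-axis scales."""
    r = quat_to_rotation(*quat)
    # M = R S, where S = diag(scale) scales each column of R
    m = [[r[i][j] * scale[j] for j in range(3)] for i in range(3)]
    # Sigma = M M^T is symmetric positive semi-definite by construction
    return [[sum(m[i][k] * m[j][k] for k in range(3)) for j in range(3)] for i in range(3)]
```

For example, the identity quaternion with scales (1, 2, 3) yields the diagonal covariance diag(1, 4, 9), an ellipsoid stretched along the z axis.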

TRIPS: The Frontier of Real-Time Rendering

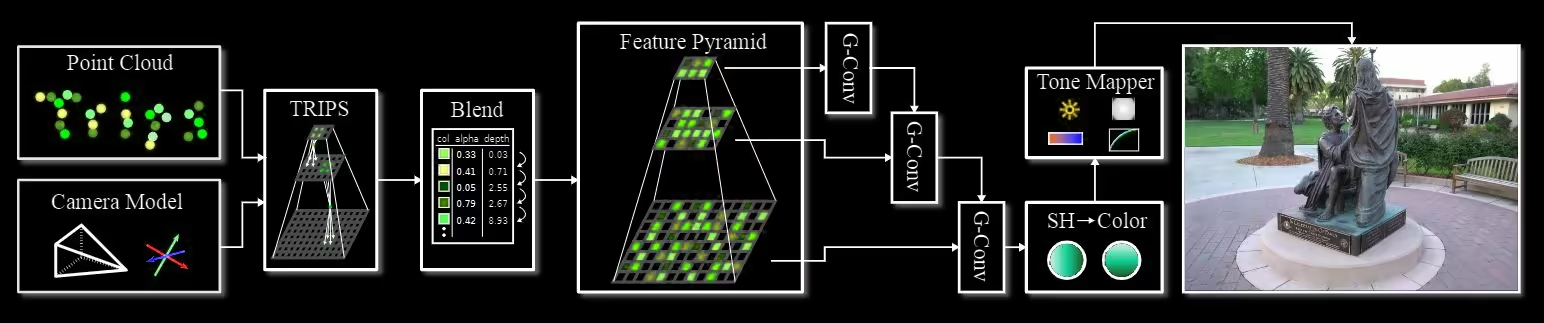

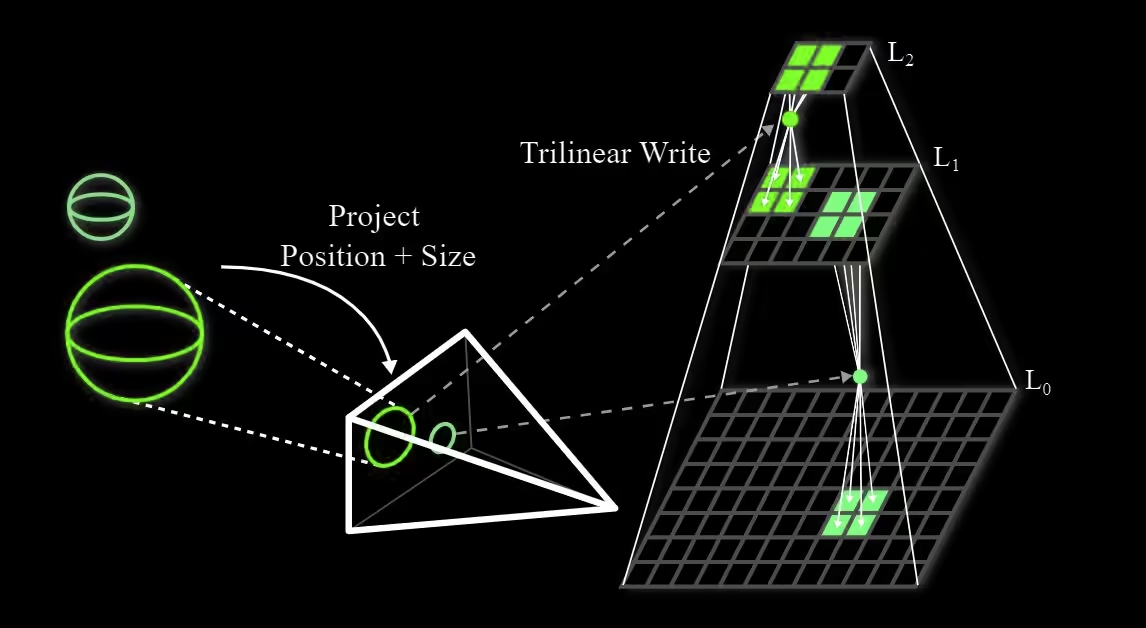

TRIPS represents the cutting edge, combining the strengths of Gaussian Splatting and ADOP (Approximate Differentiable One-Pixel Point Rendering). It rasterizes points into a screen-space image pyramid, choosing the pyramid level from each point's projected size, so that even large points can be rendered with a single trilinear write. A lightweight neural network then reconstructs a detailed, hole-free image.
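
The pyramid idea can be sketched in a few lines. A point whose projected footprint spans about 2^l pixels is written at pyramid level l; splitting its contribution between the two nearest levels, and bilinearly among four pixels within each level, gives eight weighted writes whose weights sum to one. This is a simplified sketch, assuming scalar values, plain additive accumulation, pixel centers at integer coordinates, and hypothetical helper names; the exact size-to-level mapping and blending used in TRIPS are not reproduced.

```python
import math
from typing import List

Pyramid = List[List[List[float]]]


def make_pyramid(width: int, height: int, levels: int) -> Pyramid:
    """Allocate zeroed images; each level halves the resolution (rounding up)."""
    pyramid = []
    for k in range(levels):
        w = max(1, math.ceil(width / 2 ** k))
        h = max(1, math.ceil(height / 2 ** k))
        pyramid.append([[0.0] * w for _ in range(h)])
    return pyramid


def trilinear_splat(pyramid: Pyramid, x: float, y: float, size: float, value: float) -> None:
    """Accumulate one point at level-0 pixel position (x, y) with 8 weighted writes."""
    num_levels = len(pyramid)
    # Continuous level: a footprint of 2^l pixels maps to level l
    level = min(max(math.log2(max(size, 1.0)), 0.0), num_levels - 1.0)
    l0 = int(math.floor(level))
    l1 = min(l0 + 1, num_levels - 1)
    t = level - l0  # blend factor between the two nearest levels
    for lvl, w_level in ((l0, 1.0 - t), (l1, t)):
        if w_level == 0.0:
            continue
        grid = pyramid[lvl]
        h, w = len(grid), len(grid[0])
        xs, ys = x / 2 ** lvl, y / 2 ** lvl  # coordinates shrink by 2 per level
        i0, j0 = int(math.floor(xs)), int(math.floor(ys))
        fx, fy = xs - i0, ys - j0
        for di, wx in ((0, 1.0 - fx), (1, fx)):
            for dj, wy in ((0, 1.0 - fy), (1, fy)):
                i, j = i0 + di, j0 + dj
                if 0 <= i < w and 0 <= j < h:
                    grid[j][i] += value * w_level * wx * wy
```

In the full method the written values are learned per-point features rather than scalars, and the filled pyramid is what the lightweight neural network consumes.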

Why TRIPS Stands Out

- Real-Time Performance: TRIPS maintains a 60 fps rate on standard hardware, making it suitable for real-time applications.

- Differentiable Render Pipeline: The pipeline’s differentiability means point sizes and positions can be optimized automatically, enhancing the quality of the rendered scene.

- Quality in Challenging Scenarios: TRIPS excels in rendering complex geometries and expansive landscapes, providing better temporal stability and detail than previous methods.
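
To see what a differentiable pipeline buys, consider a deliberately tiny 1D example that is not the TRIPS renderer: one point is splatted with a Gaussian footprint, and its position and size are recovered from a target image by gradient descent on an analytic gradient. The renderer, loss, learning rate, and function names are all hypothetical.

```python
import math
from typing import List, Tuple


def render(p: float, s: float, n_pixels: int) -> List[float]:
    """Splat one point as a 1D Gaussian footprint centered at p with width s."""
    return [math.exp(-((x - p) ** 2) / (2.0 * s * s)) for x in range(n_pixels)]


def loss_and_grad(p: float, s: float, target: List[float]) -> Tuple[float, float, float]:
    """Squared-error loss and its analytic gradient with respect to position p and size s."""
    image = render(p, s, len(target))
    loss, dp, ds = 0.0, 0.0, 0.0
    for x, (r, t) in enumerate(zip(image, target)):
        diff = r - t
        loss += diff * diff
        # dr/dp = r (x - p) / s^2 and dr/ds = r (x - p)^2 / s^3
        dp += 2.0 * diff * r * (x - p) / (s * s)
        ds += 2.0 * diff * r * (x - p) ** 2 / (s ** 3)
    return loss, dp, ds


def fit_point(p: float, s: float, target: List[float],
              lr: float = 0.05, steps: int = 200) -> Tuple[float, float]:
    """Plain gradient descent on the point's position and size."""
    for _ in range(steps):
        _, dp, ds = loss_and_grad(p, s, target)
        p -= lr * dp
        s = max(s - lr * ds, 1e-3)  # keep the footprint width positive
    return p, s
```

With `target = render(6.0, 1.5, 16)` and a start at position 5.0 and size 1.0, `fit_point` moves the point toward position 6.0 and adjusts its width. TRIPS applies the same principle to large point clouds, with gradients flowing through its trilinear writes and neural network.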

Wrapping Up

The journey from NeRF to TRIPS encapsulates the rapid progress in 3D scene rendering. As we move towards more efficient and high-fidelity methods, the potential for creating immersive virtual experiences becomes increasingly tangible. These technologies not only push the envelope in graphics but also pave the way for innovations in various industries, from entertainment to urban planning.

For those seeking to delve deeper into these technologies, a wealth of resources is available, including comprehensive reviews and open-source platforms that facilitate the development of NeRF projects. The future of 3D rendering is bright, and it is technologies like NeRF, Gaussian Splatting, and TRIPS that will illuminate the path forward.